AdsYou

User Research, UX Design

COURSE NAME

05-610 User Research and Evaluation

AdsYou is an advertisement personalization extension for TikTok. Its simple, intuitive interface helps users easily find and customize their ads. It enhances user experience by promoting relevant content and minimizing harmful bias.

COLLABORATORS

Qiaoqiao Ma, Penghua Zhou

PROJECT YEAR

2023

The challenge

Algorithmic bias brings users convenience through accurate recommendations but can also harm them in several ways. How do harmful biases in TikTok’s algorithmic systems affect user experience?

Problem Statement - Usability Test on TikTok's Ads

We ran usability tests on TikTok's current functionality to identify the bias issues users encounter.

1. Racial Bias: TikTok recommended only posts featuring users' own race to experienced participants, but recommended posts featuring other races to naive participants. Some participants swiped away posts featuring other races because they felt these posts did not fit their lifestyle.

2. Gender Bias: Male participants received advertisements for sports shoes, games, and sexual content, while female participants received advertisements for cosmetics and shopping malls.

3. Brand Bias: TikTok recommended only certain brands of products to participants, including electronic devices and food in our test.

4. Need Bias: Participants kept receiving ads for products they did not want or need to buy. Even after participants pressed "Not interested" on these items, TikTok kept recommending them.

5. Negative Action to Biased Ads: Most participants stayed only briefly on biased advertisements and swiped them away. A few tried to mark the first few biased advertisements as "Not interested" but gave up after a while.

RESEARCH GOALS

How might we help users personalize their advertisement settings when they face potential algorithmic bias?

Qualitative Analysis

We conducted 3 rounds of interviews with 3 participants: a think-aloud protocol, a pilot testing interview, and a semi-structured interview using the direct-storytelling method.

We collected interpretation notes from these interviews, analyzed them with an affinity diagram and a user journey map, and generated higher-level insights. The questions we paid the most attention to were:

"How do you feel about the advertisement in TikTok regarding algorithm bias?"

"How do you feel about the current advertisement setting user flow? You can share both the positive and negative views with us."

"Are there more functionalities about advertisement you wish TikTok to add in the future? Why? How do you think this can contribute to deal with the algorithm bias and make the algorithm suit your needs?"

"Is there any concern for the possible functionality you just mentioned?"

"What improvement would you like us to do to the advertisement setting interface/user flow?"

Data analysis and synthesis - Interpretation Session + Affinity Diagram

Through interpretation sessions, we analyzed users' needs, motivations, and behaviors, then grouped and labeled them in an affinity diagram, synthesizing the insights from a first-person perspective. Users described their preferences, activities, and discoveries on TikTok, and shared their needs, suggestions, complaints, and concerns.

Models - User Journey Map

We built a model, a user journey map, to better summarize and understand users' stances from the interviews. It bridges users' opinions and the design implications explored in the subsequent speed dating sessions.

Quantitative Analysis

We also conducted a 13-question survey on Google Forms covering 3 categories (app features, advertisements, and purchase behaviors) to verify our preliminary insights from the qualitative research. We received 32 responses, which helped us iterate on our insights and the subsequent speed dating session.

Most of our participants are between 22 and 25 years old and use TikTok for less than an hour a day.

The survey supports our interview findings about the current issues with ad settings and people’s strong need for a tutorial. The ad settings should be easier to find, and the interactive hints were popular among respondents.

The survey confirms that people encounter biased ads on TikTok, sometimes without realizing it; examples include buying products from ads (good bias) and receiving inappropriate or fake ads (harmful bias).

The survey also reveals respondents’ interest in recommended ads when they use TikTok to purchase items.

Insights

1. Good and bad bias: Users enjoy advertisements with good bias and acknowledge bias-related issues, even though they sometimes do not notice them.

2. Desire to mitigate bias: Personalizing the categories they are interested in reflects users' desire to mitigate bias by controlling the types of advertisements they encounter.

3. Diverse ad preference setting: Users welcome diverse advertisement preference-setting mechanisms but prefer simple and intuitive ones.

4. Need for improved navigation: Deeply nested ad-related operations and settings make personalization cumbersome, creating a need for improved navigation.

5. Seamless function integration: A seamless TikTok experience requires integrating personalization features with existing functionality.

Low-Fidelity Prototype

Speed Dating

We applied speed dating to our insights to explore possible futures, validate needs, and identify risk factors.

The first one was recognized by most participants.

- Most participants express interest in real-time feedback from TikTok.

- Users prefer simple and intuitive functionalities.

- Users prefer simple interactions on their TikTok “for you” page.


- We expected users to consider adding a plugin to eliminate ad biases, but most regarded it as too complex and unnecessary.

- Despite the surprise the preference-sharing idea brought, most users felt uneasy about it.

- It triggered concerns about privacy and their daily social interaction patterns.

LOW-FIDELITY PROTOTYPE

Building on the initial speed dating concept, we conducted a contextual prototyping session using our lo-fi prototype with 7 participants. During these sessions, we identified three critical moments in their user experience: the moment they open TikTok, when they scroll consecutively, and when they watch an ad to the end multiple times. To further explore these moments, we created a physical overlay for our interactive hints and tested them in real-life environments where people typically use TikTok, directly on participants’ phones.

Evaluation

Overall feedback from the prototyping session was positive, and our solution noticeably increased users' satisfaction. However, some flaws in the design of the physical prototyping process affected workflow consistency. Considering the final delivery method, we weighted the positive feedback more heavily in our final decision.

Final Design Prototype

Here we present our final design solutions for biased ad personalization. Because our solution takes the form of an extension, we reuse components and styles from TikTok's current UI design and integrate the extension into the existing user flow.

Scenario 1: Tutorial

We’ve moved the deeply nested "Not Interested" button to the right-hand column, positioned next to the "Like" button. When users open TikTok for the first time, the extension will display an overlay tutorial explaining its functionality.

Scenario 2: Interactive Hints

As users continue scrolling through videos, interactive hints will appear. Depending on their choice, the extension will respond differently by either adjusting recommendations (showing fewer or more of similar content) or navigating to the report page.

Scenario 3: Personalization

If users continue watching an ad without taking any action several times, the extension will prompt them to specify their preferences and guide them to the ad personalization page. Here, users can customize their preferences by selecting personalized tags, giving them more control over the ads they see.
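The three moments identified in the contextual prototyping session (opening TikTok, scrolling consecutively, and watching an ad to the end repeatedly) map naturally onto a small event-driven state machine. The sketch below is hypothetical: the class and function names, the event strings, and both thresholds are placeholders, since the case study only says "consecutively" and "several times".

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds: the case study only says "scroll consecutively"
# and "several times", so these numbers are placeholders.
HINT_AFTER_CONSECUTIVE_SCROLLS = 5
PERSONALIZE_AFTER_PASSIVE_ADS = 3


@dataclass
class AdSession:
    """Tracks the three moments that trigger the extension (hypothetical model)."""
    seen_tutorial: bool = False
    consecutive_scrolls: int = 0
    passive_ad_views: int = 0

    def on_open(self) -> Optional[str]:
        # Scenario 1: show the overlay tutorial only on the first launch.
        if not self.seen_tutorial:
            self.seen_tutorial = True
            return "show_tutorial"
        return None

    def on_scroll(self) -> Optional[str]:
        # Scenario 2: surface an interactive hint after a run of consecutive scrolls.
        self.consecutive_scrolls += 1
        if self.consecutive_scrolls >= HINT_AFTER_CONSECUTIVE_SCROLLS:
            self.consecutive_scrolls = 0
            return "show_interactive_hint"
        return None

    def on_ad_watched_to_end(self, user_acted: bool) -> Optional[str]:
        # Scenario 3: repeated passive viewing of ads opens the personalization page.
        self.consecutive_scrolls = 0  # watching to the end breaks the scroll run
        if user_acted:
            self.passive_ad_views = 0
            return None
        self.passive_ad_views += 1
        if self.passive_ad_views >= PERSONALIZE_AFTER_PASSIVE_ADS:
            self.passive_ad_views = 0
            return "open_personalization_page"
        return None


def handle_hint_choice(choice: str) -> str:
    """Map an answer to an interactive hint onto the extension's response (Scenario 2)."""
    responses = {
        "fewer": "show_fewer_similar",
        "more": "show_more_similar",
        "report": "open_report_page",
    }
    return responses.get(choice, "no_change")
```

Resetting each counter after a prompt fires keeps hints from appearing on every following video, in line with participants' preference for simple, unobtrusive interactions on the "for you" page.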

Problem Statement

OUTLIER PROBLEM

- Large datasets with unexpected outliers: outliers are hard to find but may have a large effect on prediction results.

- Webcam intruders from the collection process: consecutive capture makes removal difficult.

- Human-introduced diversity: people cannot precisely control how many outliers they insert, which may reduce prediction accuracy.

TEACHABLE MACHINE DESIGN ARGUMENT

- Teachable Machine treats all input as training data and cannot recognize outliers in the dataset.

- Prediction details are buried too deep for users without an ML background to understand easily.

DESIGN IMPLICATION

- Users can recognize outliers if we introduce a human-in-the-loop methodology.

- Simple prediction details with proper explanations can help users discover a potential outlier’s influence.
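The design implications call for the system to suggest potential outliers that a human then confirms or rejects. One simple way to produce such suggestions is a centroid-distance heuristic. This is a minimal sketch under stated assumptions, not the method used in this project: it presumes each image in a class has already been turned into a numeric feature vector, and the function name `suggest_outliers` and the cutoff `k` are hypothetical.

```python
import math
from statistics import mean, pstdev


def suggest_outliers(features: list[list[float]], k: float = 2.0) -> list[int]:
    """Return indices of samples that lie unusually far from their class centroid.

    Hypothetical heuristic: a sample is flagged when its Euclidean distance to
    the centroid exceeds mean + k * (population std) of all distances in the class.
    """
    if not features:
        return []
    dim = len(features[0])
    # Centroid = per-dimension mean of all feature vectors in this class.
    centroid = [mean(vec[i] for vec in features) for i in range(dim)]
    dists = [math.dist(vec, centroid) for vec in features]
    cutoff = mean(dists) + k * pstdev(dists)
    return [i for i, d in enumerate(dists) if d > cutoff]
```

Because the flag is a z-score against the class's own distances, very small classes limit what can be flagged: with the population standard deviation, no sample can sit more than sqrt(n - 1) standard deviations from the mean, so `k` must stay below that bound for tiny classes. The output is only a suggestion list, matching the human-filtered checklist used in the prototyping rounds.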

RESEARCH GOALS

How might we give feedback that helps users learn how outliers affect the accuracy of the trained model, so that they provide higher-quality training samples?

- (RQ1): How could the interface alert people that they might have accidentally introduced outliers into the training dataset?

- (RQ2): How could the interface guide users to effectively filter the outlier suggestions from the Teachable Machine algorithm?

PROTOTYPE DESIGN

Initial Solution 01: Human supervised classification

Initial Solution 02: Passive outlier identification and correction

Final solution: "Alert" Interface and "Outlier filter" Interface.

PROTOTYPING SESSION

I tested the prototype with 5 participants: 1 from a CS background, 2 from a design background (architecture), and 2 from interdisciplinary backgrounds (HCI & cognitive science/management). Most of them have basic knowledge of machine learning or statistics.

Round 1: Tutorial (Control Group)

- Participants run TM and observe how well the current dataset classifies the designated samples.

- Participants choose their preferred alert system from two alternatives; it serves as the test prototype of the outlier filter interface in the following rounds.

Round 2: Train new model with human-filtered training set

- Participants optimize the training set manually by removing the suggested outliers in the checklist.

- Participants run TM again on the new dataset and observe how the accuracy changes while keeping the outlier pattern in mind.

Round 3: Apply new model with new training set

- Participants manually filter a new dataset based on the outlier pattern learned in Round 2, run TM again, and observe how the accuracy changes.

After-session interview and questionnaire

Paper prototype for alert system interface

Outlier checklist

(Red: suggested, Cross: participants' decision)


Quantitative Analysis

The chart below visualizes the prediction accuracy on sample images across 3 rounds for the 5 participants. The suggested round (model-filtered) has the highest accuracy. Compared with the initial round, however, the accuracy of the manually filtered round also improved noticeably, possibly because participants learned outlier patterns from the outlier suggestion function.

Qualitative Analysis

The post-session survey and interview gave me information beyond prediction accuracy: participants shared their findings, feelings, and suggestions about the process, which made it possible to synthesize design implications.

Interface Design

For the alert interface, participants preferred the second design because:

- They can see the accuracy from it and understand the intention of deleting outliers.

- It encourages them to think about further optimization for better performance.

For the outlier filter interface, participants attributed the better performance to its simple and clear design.

Other Findings

Participants expressed stronger curiosity about Teachable Machine's mechanism than expected. Although they were willing to learn more about it, they kept an interesting distance from the algorithm. Participants with professional backgrounds were confident in their judgment in Round 2 but were disappointed by their Round 3 results.

Insights & Implications

The “alert” interface stimulates users' desire to learn what affects their prediction performance.

The “outlier filter” interface provides a possible paradigm for users to learn about how to distinguish outliers and what effect they have on the model.


NEXT STEP

More Research Questions

Participants' feedback, especially their points of confusion, also raised some interesting questions for further speculation, including:

- Why does Google put “under the hood” in the advanced functions?

- How to balance human bias (users’ choice) and ML model bias (algorithm bias)?

- How well could the filter system perform on unpredictable new datasets (generalization)?


Interface Iteration Suggestion

They also offered valuable suggestions for further interface iteration, including:

How to view the dataset:

- Row layout (integrated into the current TM upload section)

- Page view (added behind the upload section)

How to identify the marked outliers:

- In-site marking

- Outliers section for all groups

- Outliers section for each group

How to backtrack:

- Archive section (back up the best-performing filtered dataset)


THE PROJECT

Piggyback Prototyping for testing the effectiveness/likeability of social bots in social platform connections

RESEARCH GOALS

How effectively does the social bot encourage private one-to-one connections on Twitter by sending processed information via Direct Message (effectiveness), and how do people feel about this connection method (likeability)?

Q: What is one-to-one connection?

A: The ability to promote connections (following) with strangers and connections (chatting) between followers.

STRATEGY

The bot recommends similar friends/topics based on the similarity of recently liked tweets, to examine:

- How effectively does the follow-up bot message connect users?

- How does participants' attitude toward this method affect their behavior?

PROTOTYPING SESSION

I selected participants based on their Twitter geolocation; most of them lived in Pittsburgh.

Recommendation System: Scraping from Twitter

As part of the recommendation bot, I wrote a Python scraping script to calculate the similarity between two users. To find potential users to recommend, I extracted those who liked the same tweet as the participant and analyzed the similarity of their recently liked tweets. If their similarity surpassed the set threshold (85%), I included the first three hashtags related to a specific topic in the conversation as the connection content.
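A minimal sketch of the matching step, assuming the scraped data has already been reduced to a set of liked tweet IDs and an ordered hashtag list per user. The scraping itself and the original similarity metric are not described, so Jaccard similarity is used here as a stand-in; `UserLikes`, `jaccard`, and `recommend` are hypothetical names. Only the 85% threshold and the "first three hashtags" rule come from the project.

```python
from dataclasses import dataclass, field

SIMILARITY_THRESHOLD = 0.85  # the 85% threshold from the project


@dataclass
class UserLikes:
    handle: str
    liked_tweet_ids: set[str]
    hashtags: list[str] = field(default_factory=list)  # in order of appearance


def jaccard(a: set[str], b: set[str]) -> float:
    """Stand-in similarity: shared likes divided by all distinct likes."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def recommend(participant: UserLikes, candidates: list[UserLikes]) -> list[tuple[str, float, list[str]]]:
    """Return (handle, score, up to three shared hashtags) for candidates above the threshold."""
    results = []
    for cand in candidates:
        score = jaccard(participant.liked_tweet_ids, cand.liked_tweet_ids)
        if score > SIMILARITY_THRESHOLD:
            cand_tags = set(cand.hashtags)
            shared = [t for t in participant.hashtags if t in cand_tags]
            # Deduplicate while preserving order, then keep the first three.
            shared = list(dict.fromkeys(shared))[:3]
            results.append((cand.handle, score, shared))
    # Most similar candidates first.
    return sorted(results, key=lambda r: r[1], reverse=True)
```

Jaccard ignores how many tweets each user liked in total, which keeps heavy and light users comparable; the weighted, multi-signal similarity mentioned under Next Step would replace `jaccard` without changing `recommend`.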

Recommendation System: Conversation Tree

I built a conversation tree to organize how prompts are sent to participants based on the purpose of the current prototyping session and their responses.
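A conversation tree like this can be encoded as nested nodes keyed by response category. The prompts, response labels (`yes`, `no`, `no_reply`), and class and function names below are hypothetical placeholders, not the project's actual tree. Since the bot was operated manually in this study, the sketch only shows how the branching could be encoded for later automation.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PromptNode:
    """One bot prompt; children are keyed by the participant's response category."""
    message: str
    children: dict[str, PromptNode] = field(default_factory=dict)

    def next(self, response: str) -> Optional[PromptNode]:
        # An unknown response category ends the branch.
        return self.children.get(response)


# Hypothetical tree with placeholder prompts.
tree = PromptNode(
    "Hi! You and @someone recently liked similar tweets about #topic. Want to connect?",
    {
        "yes": PromptNode("Great! Would you mind answering a short questionnaire?"),
        "no": PromptNode("No problem. Could you tell us why?"),
        "no_reply": PromptNode("Just checking in: did you get a chance to look at @someone's profile?"),
    },
)


def walk(root: PromptNode, responses: list[str]) -> list[str]:
    """Return the messages the bot would send for a series of response categories."""
    sent, node = [root.message], root
    for r in responses:
        node = node.next(r)
        if node is None:
            break
        sent.append(node.message)
    return sent
```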

Recommendation System: Human bot

With this preparation in place, I created an “official” Twitter account and began manually sending follow-up prompts based on participants' responses (I didn't have the automation skills at that time, lol). Participants who were willing to assist with the project received a questionnaire.

Prototyping Session: piggyback prototyping and persona interview

The prototyping session comprised two parts with 50 participants each. Piggyback Iteration II excluded users who had followed the participants. Because the results did not yield enough feedback for analysis, I also conducted persona interviews.

Quantitative Analysis

Judging by the percentage of users who followed the prompt, effectiveness was low. I tested with 100 random participants, but the critical mass for this prototype is still unclear.

The follow-up question led participants to check and follow the instructions implicitly, without increasing the rate of being blocked (which would indicate reduced likeability).

Qualitative Analysis

In the interviews, participants identified the following factors that affect their willingness to respond to the bot:

- Privacy concern: whether the bot is certified affects users' trust in its messages. Many potential participants close DMs to strangers or state "no DMs" in their profiles.

- Human-likeness: a human-like profile or response earns more empathy from users.

- Algorithm transparency: an opaque algorithm can frighten people who receive a message out of nowhere.

Insights & Implications

Direct Messages are an annoying channel for bot messages, which may explain why only some people responded to the bot.

The critical mass for this prototype is still unclear; more than 100 samples may be needed to collect adequate feedback for analysis.

The follow-up question raised participants' attention and curiosity in a positive way. However, judging by the percentage of users who followed the prompt, effectiveness was low.

NEXT STEP

This prototyping exercise was only marginally successful, but it gave me many insights into the next steps:

Connection bot design:

- With stronger programming skills, I could automate the whole process to support a larger piggyback sample.

- There is no mechanism encouraging participants to give feedback about the conversation (whether they'd like to receive the message and how they feel about it). Meanwhile, the conversation tree should also encourage participants to respond to the bot prompt implicitly.

Prototyping algorithm:

- The similarity measure could also include profiles, hashtags, new tweets, and replies; a combined algorithm may improve recommendation accuracy and lead to more positive feedback.

Ceramic Guiding System

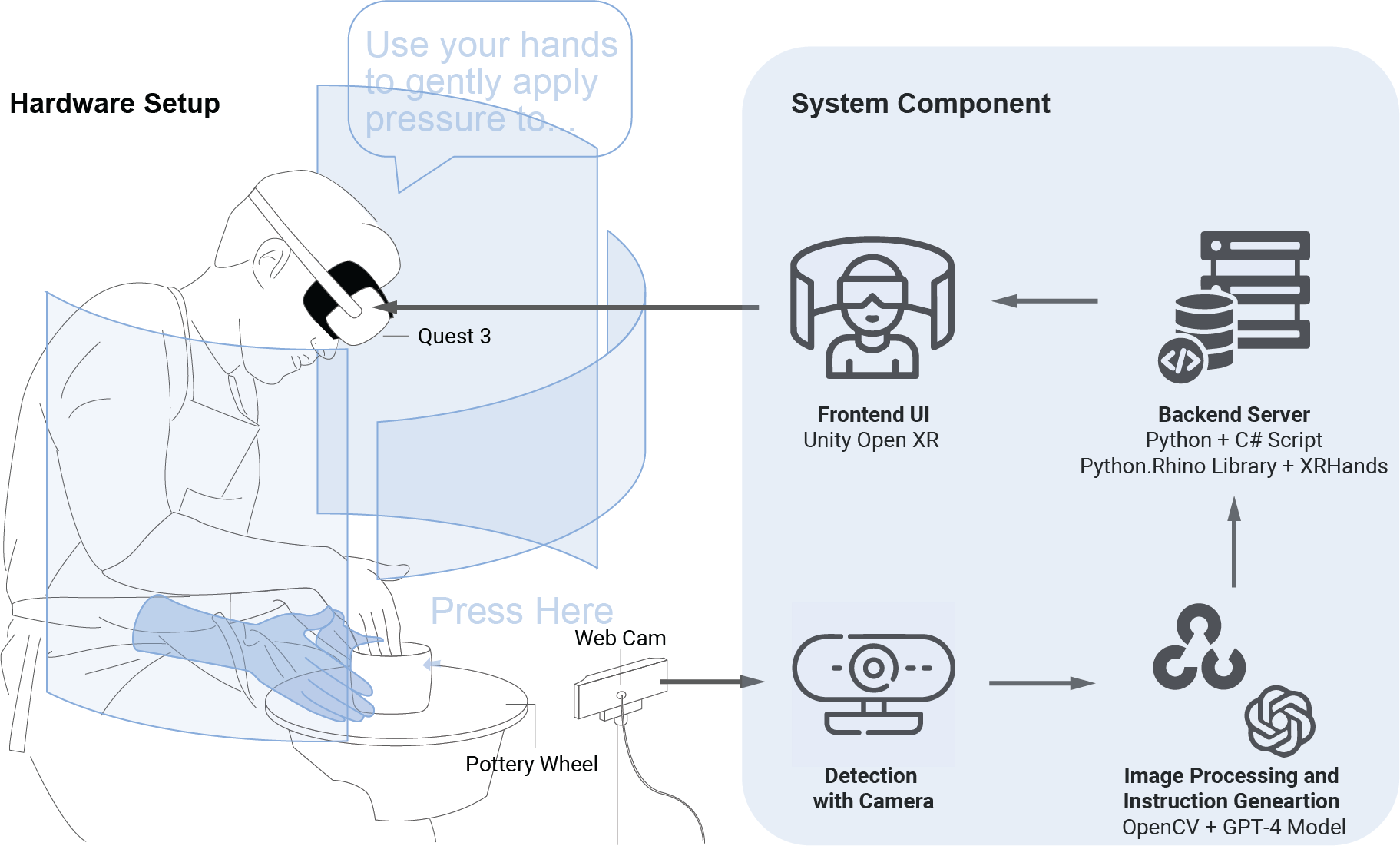

The system’s functionality is derived from design goals, with specific features informed by findings from the auto-ethnographic study. In this section, we present the system setup and provide a detailed description of its functionality with a brief overview of its technical implementation.

System Setup

We built our ceramic corner with a VEVOR 9.8" LCD Touch Screen Clay Wheel GCJX-008 as the main device and a Logitech C920 webcam to capture shape data. To ensure a clear distinction between the clay and the background, the corner is wrapped in black cloth, and the basin of the pottery wheel has been removed. Our tool is built on Meta Quest 3, using its passthrough mode to let users interact with their surroundings.

The setup process is straightforward: users put on the headset, sit in front of the pottery wheel, select the appropriate speed and mode, and start the selected tutorial with the controller. They then practice ceramic-making by hand while using voice commands to control the system’s guidance.

Functionality

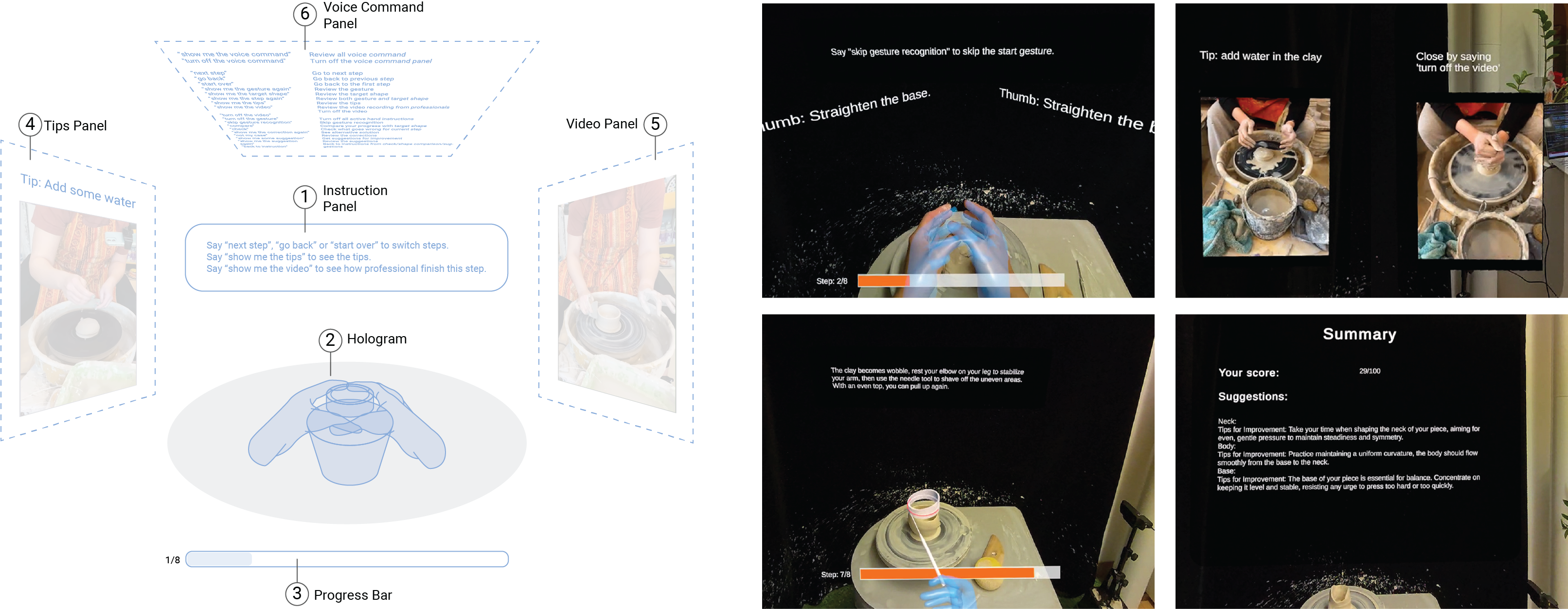

We propose two core features for the system, and customize and organize them for two user levels according to the needs identified in our formative study.

Core Features

We identify two core features in our system: hand and shape holograms as an immersive tutorial, and spatial-based correction, guidance, and suggestions as feedback.

Hand and Shape Holograms

Our system offers hand and shape holograms to display a first-person view of each step and the target shape. The gesture holograms are created from schematic diagrams, modeled in Blender, exported as .fbx animations, and imported into Unity as prefabs. For gesture recognition, we piggybacked on the XRHand package to present recognition results as text-based instructions. The language is simplified to make it easier to understand, ensuring accessibility for learners of varying skill levels.

Spatial-based Feedback

Our system provides three types of feedback based on the clay shape: correction, guidance, and suggestions. We use the computer vision library OpenCV in Python to capture the shape outline, and the Rhino.Python library to identify critical positions. Progress is then evaluated by comparing the current shape with the target shape, producing similarity scores and boolean values based on quality rules. The evaluation results are further processed in two ways: (1) text-based suggestions are generated with the OpenAI API, using personalized prompts for different skill levels, based on a comparison of the identified critical positions; (2) several profile slices are created at the intersection points between the target and current shape outlines, which are then swept to create spatial shape points. Both outputs are sent to the system via servers and visualized as (1) an overlay on the anchor in three colors: red for "push inward," green for "correct shape," and blue for "pull outward"; and (2) multimodal tutorial information.
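The three-color rule can be sketched once both outlines have been reduced to radial profiles. This is a simplified stand-in for the OpenCV/Rhino.Python pipeline described above, not the system's implementation: it assumes the current and target shapes are already sampled as radii in millimetres at the same heights, and the 2 mm tolerance, the `compare_profiles` and `SliceFeedback` names, and the "share of in-tolerance slices" similarity score are all hypothetical.

```python
from dataclasses import dataclass

TOLERANCE_MM = 2.0  # hypothetical tolerance, not taken from the project


@dataclass
class SliceFeedback:
    height_mm: float
    delta_mm: float  # current radius minus target radius
    color: str       # "red", "green" or "blue"
    hint: str


def compare_profiles(heights, current_radii, target_radii, tol=TOLERANCE_MM):
    """Compare current vs. target radial profiles sampled at the same heights."""
    if not heights or not (len(heights) == len(current_radii) == len(target_radii)):
        raise ValueError("profiles must be non-empty and sampled at the same heights")
    feedback = []
    for h, cur, tgt in zip(heights, current_radii, target_radii):
        delta = cur - tgt
        if delta > tol:
            # Clay sticks out beyond the target wall.
            feedback.append(SliceFeedback(h, delta, "red", "push inward"))
        elif delta < -tol:
            # Clay sits inside the target wall.
            feedback.append(SliceFeedback(h, delta, "blue", "pull outward"))
        else:
            feedback.append(SliceFeedback(h, delta, "green", "correct shape"))
    # Hypothetical similarity score: fraction of slices within tolerance.
    similarity = sum(f.color == "green" for f in feedback) / len(feedback)
    return similarity, feedback
```

Using a symmetric tolerance band keeps small wobbles green, so the overlay only asks the learner to act on deviations large enough to matter.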

For Novice Learner: Step-by-step Learning

Novice learners usually have limited proficiency in ceramic making. They are less familiar with each step, lack practice with wheel-throwing techniques, and need basic feedback to improve their practice. The system provides detailed step-by-step tutorials and easy-to-understand feedback during and after the learning process.

Learning Flow: Watch, Imitate and Practice

From our ethnographic study, we observed that students watch and follow their instructors, and their gestures are occasionally corrected. Correct and precise gestures are critical in wheel-throwing because ceramic making demands high precision. However, traditional teaching methods often struggle to provide real-time feedback on gestures during the shadowing process. Our system adopts a similar procedure but enhances the experience: users first watch a holographic animation to observe the gestures, then imitate the starting gesture with real-time hand-part suggestions, and finally practice what they have learned from the hologram.

Spatial learning: Hologram, Video and Tips

Wheel-throwing is a three-dimensional task, requiring learners to shape clay in space. From our observation, in traditional studio settings, observation alone is often insufficient to capture the nuanced details of hand movements and their interaction with clay; even with hands-on guidance, the learner’s observation is typically limited to a third-person view. Our system leverages MR to create a spatially immersive display, providing (1) a first-person hologram overlay that displays the relationship between hands and clay, allowing users to closely observe and understand the intricate details of each gesture; and (2) third-person demonstration videos and expert tips that recover knowledge from the traditional studio by conveying contextual factors such as moisture, pressure, and speed. The system enhances the learner’s understanding of both explicit and tacit aspects of ceramic making, bridging the gap between traditional and immersive learning experiences.

Learn from feedback

For novice learners, it is crucial to receive feedback during their learning journey when they encounter difficulties and learn how to improve for their next attempt. Our system fulfills these needs by providing correction during ’critical incidents’, restoring the hands-on instructional experience of in-person learning. The system also generates ad-hoc summaries with suggestions for the final clay shape, allowing learners to reflect on their current attempt and improve their performance in the next round.

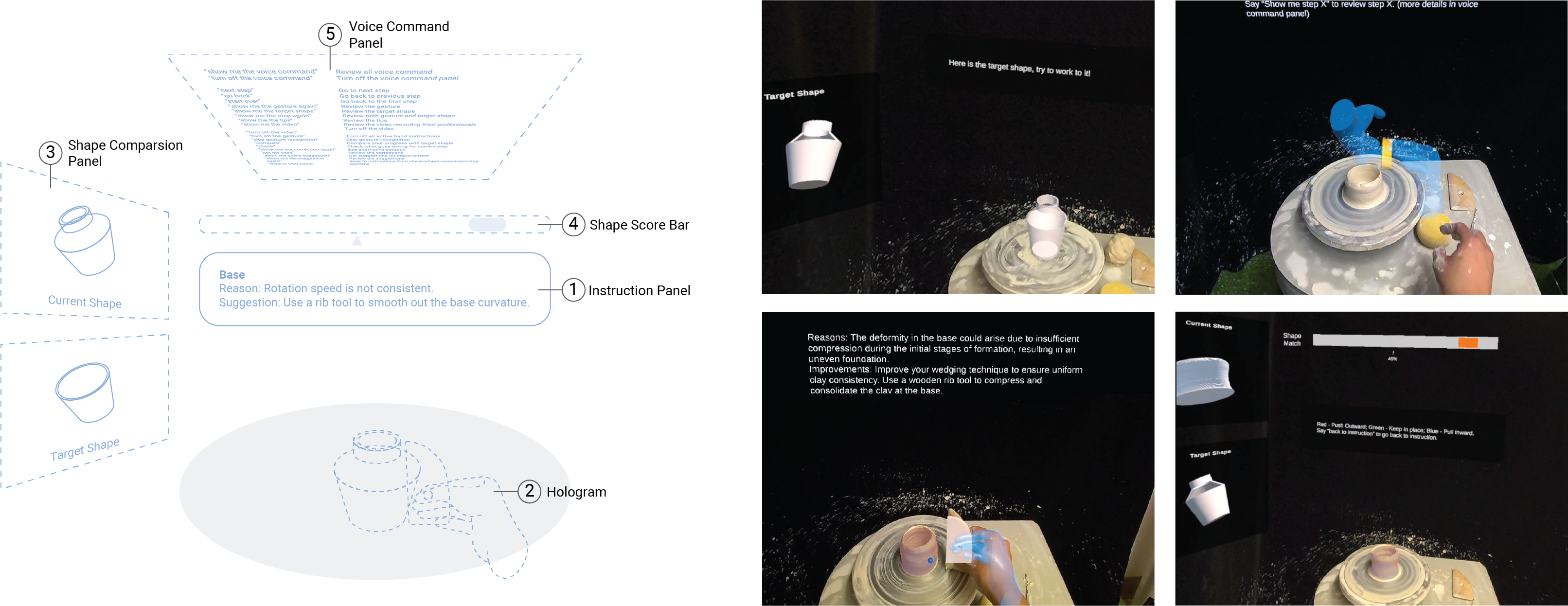

For Experienced Practitioner: Guiding and Learning

Experienced practitioners have acquired the necessary skills to make ceramics but still have room for improvement. The system aims to give them the freedom to work independently while offering support to refine their pieces, enhancing their understanding of the craft through augmented practice.

Revisit and Reference

During our ceramic study journey and pilot studies, we observed that experienced practitioners need to refresh their skills. They can revisit gesture and shape holograms to reinforce their techniques at any time. The system also visualizes the current and target shapes in real time on the side panel for reference, so practitioners can make pottery at their own pace and periodically refer to the updates as needed.

Shape Guidance

For experienced practitioners, the goal shifts from simply creating a decent ceramic shape to achieving perfection. We provide real-time color-coded overlays to guide them in shaping the clay by comparing the current shape to the target shape during the process. They can refer to the overlay and fix the shape accordingly.

Multimodal Suggestion

For experienced practitioners, advanced knowledge of skills and tools leads to higher expectations for detailed and precise instructional assistance. Expanding on the summary in elementary mode, our system provides multimodal suggestions based on essential pottery parts, integrating text, audio, and gesture/shape holograms. This multimodal approach provides more detailed information, making it easier for experienced users to absorb and expand their knowledge.

Demo

BACKGROUND

The research explores LLM hallucination as it becomes entangled with embodied media. As an emerging concept, it has yet to be explored from a design-oriented perspective. Current algorithmic experience (AX) prototyping methods scratch the surface of the negative side of algorithms but do not elaborate on how to deal with “erroneous or unpredictable” results; they also limit the algorithm to virtual and tangible forms and explore it only from a human-centered design perspective. Thus, the research proposes to prototype LLM hallucination by expanding the definition to a broader embodied medium and using the AX prototyping method for speculation. To better convey the experience, the research focuses on two encounter scenarios: investigating the potential of multimodality as a conveying medium from a first-person perspective, and integrating speculative design with a broader embodied medium beyond material from a third-person perspective.

Territory Map of current research

Narrative Medium -

first-person and third-person


RESEARCH QUESTION

When LLM hallucinations are integrated into everyday embodied interaction experience:

(RQ1) - How might users recognize, interpret, and relate to LLM hallucination?

(RQ2) - How could designers leverage insights from the users to engage with hallucinations through embodied mediums and methodologies?


Hypothesis

Algorithmic Experience in Interaction Process

Experience from algorithm logic/mechanism: This experience stems from how algorithms work. For prediction-based machine learning algorithms, the input-output relationship is clear. In contrast, LLMs generate outputs as predicted token sequences, where user influence is more implicit because the output is natural language, creating a different kind of interaction.
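To make the contrast with prediction-based models concrete, the toy example below samples a "next token" from scores a model might assign to candidate continuations. The vocabulary and scores are invented and do not come from any real model, and the function names are hypothetical. Sampling temperature is only one of several factors that shape how often low-probability continuations appear, so this illustrates output variability rather than explaining hallucination as a whole.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(vocab, logits, temperature=1.0, rng=None):
    """Draw one token according to the temperature-scaled probabilities."""
    rng = rng or random.Random()
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]


# Toy continuation of "The capital of France is ..." (invented scores).
vocab = ["Paris", "Lyon", "Atlantis"]
logits = [5.0, 2.0, 0.5]
```

Unlike a classifier that maps the same input to the same label, repeated calls to `sample_next_token` with the same input can return different tokens, and raising `temperature` makes the unlikely "Atlantis" noticeably more probable.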

Experience from algorithm-generated content: This focuses on the type of content produced by algorithms. For LLMs, text, speech, images, or 3D models each provide distinct experiences, shaped by the modality in specific scenarios.

Experience from human interpretation of output: Users interpret algorithm outputs based on their socio-technical context, leading to varied reactions and experiences shaped by how they engage with the results.


Hallucination Experience Glossary

Here, LLM hallucination encompasses various technical issues that result in deviating responses. These issues stem from different causes and produce varied outcomes, shaped heavily by social context. I propose the following glossary to serve as entry points into the imaginative potential of LLM hallucinations:

Empathy – Emotional experience from a technical flaw: This perspective arises from how audiences interpret LLM hallucinations. While some express frustration, others view hallucinated responses as offering companionship, finding emotional support in erroneous yet benign feedback. The technical flaw becomes a personal, emotional experience.

Serendipity – Alternative experience resonating with social relationships: This reframing highlights hallucinations as responses that seem socially connected yet unexpected, fostering curiosity, empathy, and reflection. These moments of serendipity help users connect with broader social contexts in meaningful ways.

Alchemy – Creative experience from hallucinated content: In content generation, hallucinated responses, though factually inaccurate, can spark creativity. Users may find inspiration beyond their knowledge or expectations, turning hallucination into a catalyst for creative exploration.

Method

Prototyping Hallucination Experience

Prototype 01: Moodie Assistant

Key words: Empathy, Emotional projection, Interpretation ambiguity

Moodie Assistant depicts the algorithm experience as an emotional response to hallucination. The prototype takes the form of a voice assistant with a gauge indicating the hallucination level. It is equipped with a series of remote controls that let users and audience members express their emotional experience during the conversation, offering different modalities and levels of granularity for controlling hallucination. The two roles, user and audience, react emotionally in different ways as they interact with the devices. The prototype's embodiment gives us a medium for discussing interpretation ambiguity.

Prototype 02: Whisper Web

Key words: Serendipity, occasional encounters situated in social context

Whisper Web explores the hallucination experience as serendipity. The prototype takes the form of a chatbot with a personalized context “collection” that simulates an LLM “training set.” It reflects on how people react when a hallucination supplies deviated context implying different social relationships. Instead of intervening directly, the prototype uses visualization as a medium to observe and document how hallucinated information creates tension between humans and conversational agents.

Prototype 03: Mindscape

Key words: Alchemy, turning hallucinated content into creative ideas, insights, and innovations

Mindscape explores the experience by seeking creative opportunities in LLM hallucination. The prototype is an XR application on an immersive environment platform that lets users ideate and build an alternative world with a hallucinating LLM, focusing on the brainstorming workflow and ideation/iteration. It aims to investigate the effect of hallucination-generated content on creativity. The immersive embodiment relaxes reality constraints and amplifies imagination as far as possible.

Speculative Film - Experience Narrative

User (pilot) study

Six participants were recruited through word of mouth for the pilot study. All are MSCD students or alumni with rich experience in design practice and familiarity with LLM-related applications and tools. They were paired into three groups, and three observation studies were conducted on three consecutive days. Through the observation studies and the follow-up interviews, we could listen to what participants “say” and watch what they “do”. After analyzing the collected data with affinity diagrams and interactive visualization, we conducted a workshop the following week for introspection and exploratory participatory design, in which participants proposed “solutions” from the perspectives of both design experts and users.

findings

(Note: Red: Recognition; Yellow: Interpretation; Blue: Relation)

Recognition

Interpretation

Relation

1

LLM Hallucination Characteristics

False positive from camouflage

Empathy from modal limitation

Doubt from aligning human expectation with model’s interpretation

Complex emotion response from ambiguity

Confusion from factual subtlety

2

Embodied medium's effect on LLM hallucination experience

Medium’s explainability on hallucination

The origin of hallucination

Empathy from engagement with mediums

Medium’s indication ability on hallucination

Interpretability of modality

Match between hallucination and medium nature

Learning burden of medium

Interplay between hallucination and prototyping technique

3

Interactive pattern of LLM hallucination experience

From irrelevant response content

Triggered by irrelevant, hard-to-interpret responses

When hallucination aligns with user intention, emotion, and social distance (inner norm)

From outlier response pattern

Triggered by resonance with an alternative context

When respecting fine line between error and hallucination (value judgment)

insights

1

Prototyping on the fine line of recognition and relation

2

Prototyping hallucination and medium’s nature

3

Prototyping for critical, impactful moments

4

Prototyping with minimal learning burden

Designers need to balance clear recognition of errors and hallucinations in order to evoke empathy and create deeper connections with the experience. This balance ensures that participants can relate to the hallucination without being distracted by obvious mistakes.

Is hallucination more suited to factual knowledge or abstract concepts, and does the medium enhance its explanation or engagement? Prototyping should align with these characteristics, not only to inform future designs but also to communicate more effectively with the audience.

While some hallucinated moments, like factual errors or striking out-of-context responses, are key to the experience, others are benign or deeply hidden and can be overlooked in prototyping. Instead of monitoring everything, prototypes should focus on critical moments to enhance users' perception.

When prototyping works as a probe to explore design implications and alternative possibilities, designers should use rapid, easy-to-understand methods to reduce both the objective burden of the prototyping medium and the subjective burden of complex or unclear speculative discourse.